These other types of blending can be used in conjunction with the method I’ve already described, provided you are careful about keeping motion and aspect ratios synchronized between applications. There are three other methods I’d like to briefly touch on. This is the most straightforward way to blend, but it’s not the only way. These outputs are then blended according to user preference in a video editing application like Resolve or Premiere. The blending method I’ve discussed so far starts with one video and creates multiple upscaled outputs in a single application.

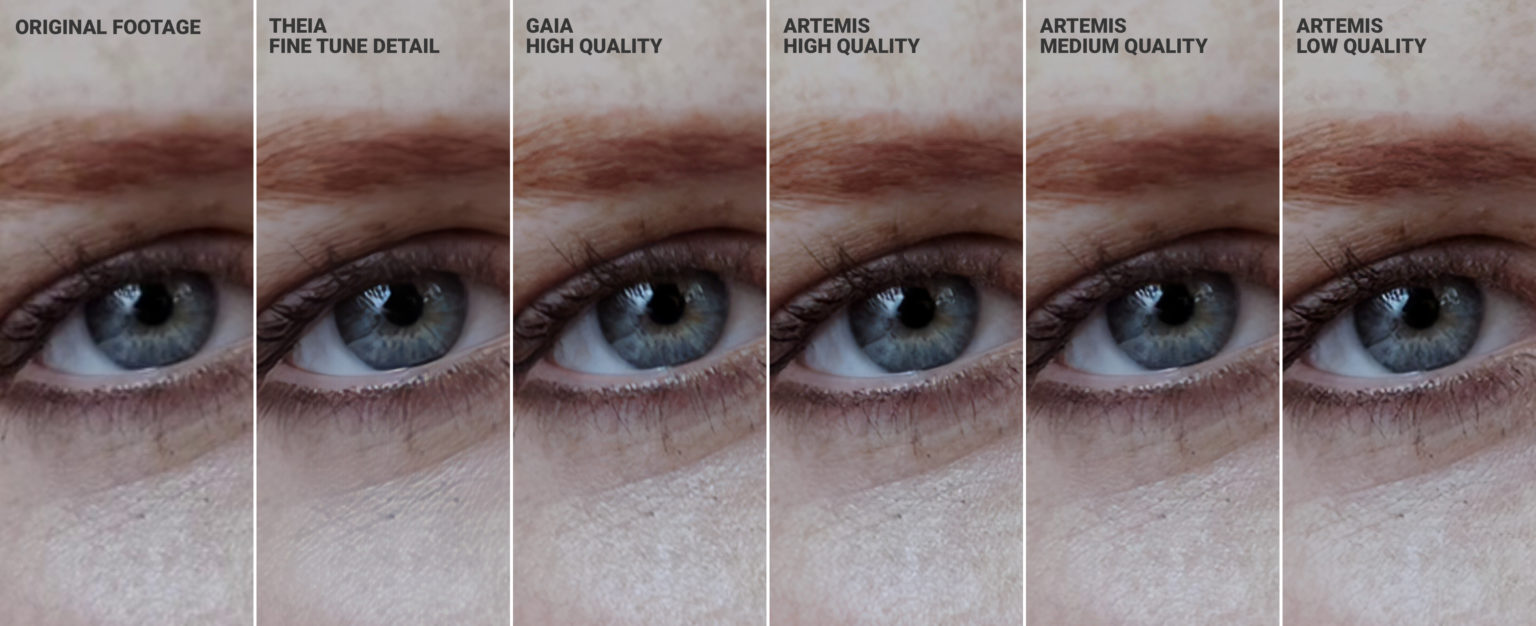

Blend Various Outputs (and Possibly Inputs) TVEAI tends to perform better when grain and noise are injected, and it likes QTGMC’s injected grain and noise better than filters like GrainFactory or AddGrainC. If you use QTGMC, I recommend experimenting with the NoiseRestore and GrainRestore functions at settings of 0.25 – 1.25, depending on your content. I also recommend allowing TVEAI to handle sharpening in most cases you’ll get less ringing that way. AviSynth and VapourSynth are also better at handling DVD footage from television shows that mixed both 23.976 fps and 29.97 fps content - which is pretty much all late-90s sci-fi and fantasy TV. Generally speaking, older videos often deinterlace / detelecine better in AviSynth and VapourSynth than in paid applications like DaVinci Resolve or Adobe Premiere. The QTGMC filter available in both AviSynth and VapourSynth is a great deinterlacer and image processor. Deinterlace With AVS/VS, Not TVEAIĭetelecine/deinterlace in either AviSynth or VapourSynth, not Topaz VEAI. Upscale this new version of your video and drop it on top of the stack. Is it over-sharpened in a few places beyond what the original video can help you diminish? Spin up a custom set of filters in AVS/VS to deliberately smooth and soften your source file. Is your final upscale too strong? Drop the original video back on top at 15-25 percent opacity. With blending, you can take advantage of these differences to precision-target how you want your final videos to look. Preprocessing strengthens line detail in Artemis High Quality and weakens it in v1_Kiwami. Topaz doesn’t do nearly as much additional denoising when given the filtered file, but it does apply an entirely different level of sharpening across the scene. Cupscale’s Kemono_ScaleLite_v1_Kiwami model treats pre-processing in Hybrid as a reason to create a much smoother video with less noise and weaker lines. What’s fascinating is that the two outputs are affected very differently. 
Note that both Cupscale and TVEAI are impacted by pre-processing the video, as we’ve previously stated. Here’s a set of four frames upscaled from two different sources in both Cupscale and TVEAI. You can take advantage of these two facts to create virtually any kind of output you like. By pre-processing video differently, you can change what any given AI model sees and emphasizes. Different models treat the same content differently.Ģ). While blending outputs can make good-looking scenes even better, I primarily use it to improve low-quality scenes, not to extract higher and higher levels of detail from already good ones.
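For readers who want to see what dropping the original video back on top at 15-25 percent opacity does numerically, here is a minimal Python sketch. It assumes a plain "normal" blend mode and 8-bit grayscale frames stored as nested lists; the function name `blend_frames` and the pixel values are hypothetical, and a real editor like Resolve or Premiere works on full-color frames in its own color pipeline, so treat this as an illustration of the arithmetic rather than a description of what those applications do internally.

```python
def blend_frames(base, overlay, opacity):
    """Composite `overlay` on top of `base` with normal-mode opacity blending.

    Frames are equally sized 2D lists of 8-bit grayscale values (0-255).
    Each output pixel is (1 - opacity) * base + opacity * overlay.
    """
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must be between 0.0 and 1.0")
    same_shape = len(base) == len(overlay) and all(
        len(b_row) == len(o_row) for b_row, o_row in zip(base, overlay)
    )
    if not same_shape:
        raise ValueError("frames must have identical dimensions")
    return [
        [round((1.0 - opacity) * b + opacity * o) for b, o in zip(b_row, o_row)]
        for b_row, o_row in zip(base, overlay)
    ]


# Hypothetical pixel values: a harsh, over-sharpened edge in the upscale
# and the softer version of the same edge in the original footage.
upscaled = [[0, 255, 0], [0, 255, 0]]
original = [[60, 180, 60], [60, 180, 60]]

# Original layered on top of the upscale at 20 percent opacity.
softened = blend_frames(upscaled, original, opacity=0.20)
print(softened)  # [[12, 240, 12], [12, 240, 12]]
```

At 20 percent opacity the edge contrast drops from 255 to 228 levels, which is the kind of gentle pull-back the low opacity range is meant to give. The dimension check mirrors the point about keeping frames aligned: the two layers must match in size (and, in practice, in motion) before blending makes sense.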
